About

Welcome to the NeurIPS 2022 Workshop on Machine Learning for Autonomous Driving!

Autonomous vehicles (AVs) offer a rich source of high-impact research problems for the machine learning (ML) community, including perception, state estimation, probabilistic modeling, time series forecasting, gesture recognition, robustness guarantees, real-time constraints, user-machine communication, multi-agent planning, and intelligent infrastructure. Further, bringing ML subfields together toward the common goal of autonomous driving can catalyze cross-field discussions that spark new avenues of research, which this workshop aims to promote. As an application of ML, autonomous driving has the potential to greatly benefit society by reducing road accidents, giving independence to those unable to drive, and even inspiring younger generations with tangible examples of ML-based technology clearly visible on local streets. All are welcome to attend! This will be the 7th NeurIPS workshop in this series. Previous workshops in 2016, 2017, 2018, 2019, 2020, and 2021 enjoyed wide participation from both academia and industry.

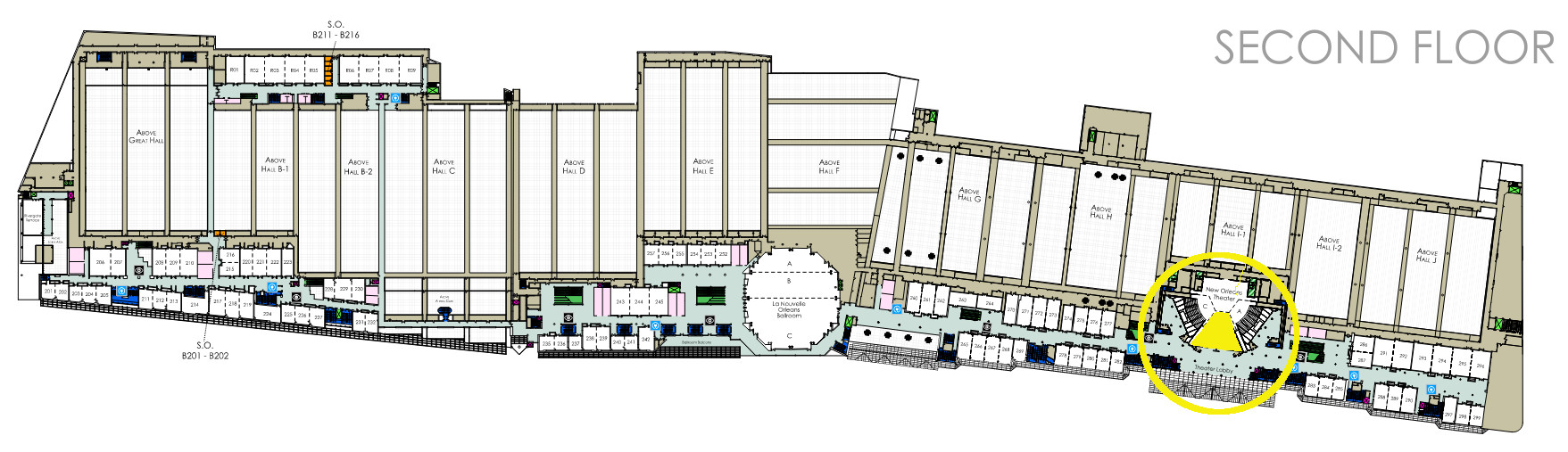

Attending:

1. Register for NeurIPS.

2. Authors: bring a poster or print one onsite (use thin, non-laminated paper, no larger than 24 inches wide x 36 inches high).

3a. Attend in person: come find us in Theater B on the 2nd floor of the New Orleans Convention Center.

3b. Attend virtually: watch talks live from our NeurIPS Portal, ask questions in the "Chat" window, and meet authors at our Gather Town poster sessions at 10:15, 12:10, and 14:10 CST.

Travel Awards: you can apply for financial support to attend our workshop here.

Contact: ml4ad2022@googlegroups.com

Post Event: all videos are now available on SlidesLive and YouTube.

Dates


Speakers

Morning speakers

-

Hang Zhao

Assistant Professor

Tsinghua University

-

Bo Li

Assistant Professor

University of Illinois, Urbana-Champaign

-

Bolei Zhou

Assistant Professor

University of California, Los Angeles

-

Yuning Chai

Head of AI Research

Cruise

Afternoon speakers

-

Nick Rhinehart

Postdoctoral Researcher

University of California, Berkeley

-

Liting Sun

Research Scientist

Waymo

-

Stewart Worrall

Senior Research Fellow

The University of Sydney

Submissions

Submission deadline: 1st October 2022 at 23:59 Anywhere on Earth

Submission website: https://cmt3.research.microsoft.com/ML4AD2022

Submission format: anonymized extended abstracts (4 pages) or full papers (8 or 9 pages), prepared using:

• neurips_2022_ml4ad.tex -- LaTeX template

• neurips_2022_ml4ad.sty -- style file for LaTeX 2e

• neurips_2022_ml4ad.pdf -- example PDF output

References and appendices should be included in the same (single) PDF document and do not count toward the page limit.

We invite submissions on machine learning applied to autonomous driving, including (but not limited to):

• Supervised scene perception and classification

• Unsupervised representation learning for driving

• Behavior modeling of pedestrians and vehicles

• Gesture recognition

• Uncertainty propagation through AV software pipelines

• Metrics for autonomous driving

• Benchmarks for autonomous driving

• Real-time inference and prediction

• Causal modeling for multi-agent traffic scenarios

• Robustness to out-of-distribution road scenes

• Imitative driving policies

• Transfer learning from simulation to real-world (Sim2Real)

• Coordination with human-driven vehicles

• Coordination with vehicles (V2V) or infrastructure (V2I)

• Explainable driving decisions

• Adaptive driving styles based on user preferences

FAQ

Q: Will there be archival proceedings?

A: No. Submissions of any length (4, 8, or 9 pages) will not be indexed or published in archival proceedings.

Q: Should submitted papers be anonymized?

A: Yes. If accepted, we will ask for a de-anonymized version to link on the website.

Q: My paper contains ABC, but not XYZ. Is this good enough for a submission?

A: Submissions will be evaluated based on these reviewer questions.

Schedule

Saturday, December 3rd, 2022. All times are in Central Standard Time (UTC-6).

Challenge

The CARLA Autonomous Driving Challenge 2022 winners will present their solutions as part of the workshop. Details here.

Papers

Extended Abstracts

Risk Perception in Driving Scenes

Nakul Agarwal, Yi-Ting Chen

paper | poster B2 | youtube

Multi-Modal 3D GAN for Urban Scenes

Loïck Chambon, Mickael Chen, Tuan-Hung Vu, Alexandre Boulch, Andrei Bursuc, Matthieu Cord, Patrick Pérez

paper | poster B9 | slideslive

DriveCLIP: Zero-Shot Transfer for Distracted Driving Activity Understanding using CLIP

Md Zahid Hasan, Ameya Joshi, Mohammed Rahman, Archana Venkatachalapathy, Anuj Sharma, Chinmay Hegde, Soumik Sarkar

paper | poster C2 | slideslive

Are All Vision Models Created Equal? A Study of the Open-Loop to Closed-Loop Causality Gap

Mathias Lechner, Ramin Hasani, Alexander Amini, Tsun-Hsuan Wang, Thomas Henzinger, Daniela Rus

paper | poster C9 | slideslive

Rationale-aware Autonomous Driving Policy utilizing Safety Force Field implemented on CARLA Simulator

Ho Suk*, Taewoo Kim*, Hyungbin Park, Pamul Yadav, Junyong Lee, Shiho Kim

paper | poster B1 | slideslive | youtube

Full Papers

Monitoring of Perception Systems: Deterministic, Probabilistic, and Learning-based Fault Detection and Identification

Pasquale Antonante, Heath Nilsen, Luca Carlone

paper | poster B7 | slideslive | youtube

VN-Transformer: Rotation-Equivariant Attention for Vector Neurons

Serge Assaad, Carlton Downey, Rami Al-Rfou, Nigamaa Nayakanti, Benjamin Sapp

paper | poster A2 | slideslive | youtube

Controlling Steering with Energy-Based Models

Mykyta Baliesnyi, Ardi Tampuu, Tambet Matiisen

paper | poster A1 | youtube

Verifiable Goal Recognition for Autonomous Driving with Occlusions

Cillian Brewitt, Massimiliano Tamborski, Stefano Albrecht

paper | poster D6 | slideslive

Robust Trajectory Prediction against Adversarial Attacks

Yulong Cao, Danfei Xu, Xinshuo Weng, Zhuoqing Mao, Anima Anandkumar, Chaowei Xiao, Marco Pavone

paper | poster A7 | slideslive | youtube

AdvDO: Realistic Adversarial Attacks for Trajectory Prediction

Yulong Cao, Chaowei Xiao, Anima Anandkumar, Danfei Xu, Marco Pavone

paper | poster A6 | slideslive | youtube

Improving Motion Forecasting for Autonomous Driving with the Cycle Consistency Loss

Titas Chakraborty, Akshay Bhagat, Henggang Cui

paper | poster B5 | slideslive | youtube

VISTA: VIrtual STereo based Augmentation for Depth Estimation in Automated Driving

Bin Cheng, Kshitiz Bansal, Mehul Agarwal, Gaurav Bansal, Dinesh Bharadia

paper | poster B3 | slideslive | youtube

Finding Safe Zones of Markov Decision Processes Policies

Lee Cohen, Yishay Mansour, Michal Moshkovitz

paper | poster C8 | slideslive | youtube

One-Shot Learning of Visual Path Navigation for Autonomous Vehicles

Zhongying CuiZhu*, Francois Charette*, Amin Ghafourian*, Debo Shi, Matthew Cui, Anjali Krishnamachar, Iman Bozchalooi

paper | poster A5 | slideslive | youtube

Stress-Testing Point Cloud Registration on Automotive LiDAR

Amnon Drory, Raja Giryes, Shai Avidan

paper | poster D1 | slideslive | youtube

KING: Generating Safety-Critical Driving Scenarios for Robust Imitation via Kinematics Gradients

Niklas Hanselmann, Katrin Renz, Kashyap Chitta, Apratim Bhattacharyya, Andreas Geiger

paper | poster D7 | slideslive | youtube

Fast-BEV: Towards Real-time On-vehicle Bird's-Eye View Perception

Bin Huang*, Yangguang Li*, Enze Xie*, Feng Liang*, Luya Wang, Mingzhu Shen, Fenggang Liu, Tianqi Wang, Ping Luo, Jing Shao

paper | poster D8 | slideslive

DiffStack: A Differentiable and Modular Control Stack for Autonomous Vehicles

Peter Karkus, Boris Ivanovic, Shie Mannor, Marco Pavone

paper | poster D5 | slideslive | youtube

An Intelligent Modular Real-Time Vision-Based System for Environment Perception

Amirhossein Kazerouni*, Amirhossein Heydarian*, Milad Soltany*, Aida Mohammadshahi*, Abbas Omidi*, Saeed Ebadollahi

paper | poster A9 | slideslive | youtube

PolarMOT: How Far Can Geometric Relations Take Us in 3D Multi-Object Tracking?

Aleksandr Kim, Guillem Brasó, Aljoša Ošep, Laura Leal-Taixé

paper | poster B6 | slideslive | youtube

TALISMAN: Targeted Active Learning for Object Detection with Rare Classes and Slices using Submodular Mutual Information

Suraj Kothawade, Saikat Ghosh, Sumit Shekhar, Yu Xiang, Rishabh Iyer

paper | poster D2 | slideslive | youtube

CW-ERM: Improving Autonomous Driving Planning with Closed-loop Weighted Empirical Risk Minimization

Eesha Kumar, Yiming Zhang, Stefano Pini, Simon Stent, Ana Sofia Rufino Ferreira, Sergey Zagoruyko, Christian Perone

paper | poster A4 | slideslive

Missing Traffic Data Imputation Using Multi-Trajectory Parameter Transferred LSTM

Jungmin Kwon, Hyunggon Park

poster D3 | slideslive | youtube

Calibrated Perception Uncertainty Across Objects and Regions in Bird's-Eye-View

Markus Kängsepp, Meelis Kull

paper | poster D4 | slideslive | youtube

Imitation Is Not Enough: Robustifying Imitation with Reinforcement Learning for Challenging Driving Scenarios

Yiren Lu, Justin Fu, George Tucker, Xinlei Pan, Eli Bronstein, Rebecca Roelofs, Benjamin Sapp, Brandyn White, Aleksandra Faust, Shimon Whiteson, Dragomir Anguelov, Sergey Levine

paper | poster B4 | slideslive

ViT-DD: Multi-Task Vision Transformer for Semi-Supervised Driver Distraction Detection

Yunsheng Ma, Ziran Wang

paper | poster A3 | slideslive | youtube

Direct LiDAR-based Object Detector Training from Automated 2D Detections

Robert McCraith, Eldar Insafutdinov, Lukas Neumann, Andrea Vedaldi

paper | poster D9 | slideslive

Safe Real-World Autonomous Driving by Learning to Predict and Plan with a Mixture of Experts

Stefano Pini, Christian Perone, Aayush Ahuja, Ana Sofia Rufino Ferreira, Moritz Niendorf, Sergey Zagoruyko

paper | poster C7 | slideslive

PlanT: Explainable Planning Transformers via Object-Level Representations

Katrin Renz, Kashyap Chitta, Otniel-Bogdan Mercea, Almut Sophia Koepke, Zeynep Akata, Andreas Geiger

paper | poster C3 | slideslive | youtube

Distortion-Aware Network Pruning and Feature Reuse for Real-time Video Segmentation

Hyunsu Rhee, Dongchan Min, Sunil Hwang, Bruno Andreis, Sung Ju Hwang

paper | poster A10 | slideslive | youtube

Improving Predictive Performance and Calibration by Weight Fusion in Semantic Segmentation

Timo Sämann, Ahmed Mostafa Hammam, Andrei Bursuc, Christoph Stiller, Horst-Michael Groß

paper | poster C4 | slideslive

GNM: A General Navigation Model to Drive Any Robot

Dhruv Shah*, Ajay Sridhar*, Arjun Bhorkar, Noriaki Hirose, Sergey Levine

paper | poster D10 | slideslive

Enhancing System-level Safety in Autonomous Driving via Feedback Learning

Sin Yong Tan, Weisi Fan, Qisai Liu, Tichakorn Wongpiromsarn, Soumik Sarkar

paper | poster C10 | slideslive | youtube

Analyzing Deep Learning Representations of Point Clouds for Real-Time In-Vehicle LiDAR Perception

Marc Uecker, Tobias Fleck, Marcel Pflugfelder, Marius Zöllner

paper | poster C6 | slideslive

CAMEL: Learning Cost-maps Made Easy for Off-road Driving

Kasi Viswanath, Sujit Baliyarasimhuni, Srikanth Saripalli

paper | poster A8 | slideslive | youtube

A Versatile and Efficient Reinforcement Learning Approach for Autonomous Driving

Guan Wang, Haoyi Niu, Desheng Zhu, Jianming Hu, Xianyuan Zhan, Guyue Zhou

paper | poster C5 | slideslive

Uncertainty-Aware Self-Training with Expectation Maximization Basis Transformation

Zijia Wang, Wenbin Yang, Zhi-Song Liu, Zhen Jia

paper | poster B10 | slideslive | youtube

Potential Energy based Mixture Model for Noisy Label Learning

Zijia Wang, Wenbin Yang, Zhi-Song Liu, Zhen Jia

paper | poster C1 | slideslive | youtube

A Graph Representation for Autonomous Driving

Zerong Xi, Gita Sukthankar

paper | poster B8 | slideslive | youtube

Location

Theater B, 2nd floor, New Orleans Convention Center, 900 Convention Center Boulevard, New Orleans, Louisiana 70130, United States

Organizers

-

Jiachen Li jiachen_li@stanford.edu

is a Postdoctoral Scholar at Stanford University working on scene understanding and decision making for intelligent systems.

-

Nigamaa Nayakanti nigamaa@waymo.com

is a research scientist at Waymo working on behavior prediction and modeling for autonomous vehicles.

-

Xinshuo Weng xweng@nvidia.com

is a Research Scientist at NVIDIA Autonomous Vehicle Research working on 3D computer vision and generative models in the context of autonomous driving.

-

Daniel Omeiza daniel.omeiza@cs.ox.ac.uk

is a PhD student at the University of Oxford working on explainability in autonomous vehicles.

-

Ali Baheri ali.baheri@mail.wvu.edu

is an assistant professor at West Virginia University working on machine learning, control, and data-driven optimization.

-

German Ros german.ros@intel.com

is the lead for Intel Autonomous Agents Labs.

Challenge Organizers

-

German Ros german.ros@intel.com

is the lead for Intel Autonomous Agents Labs.

-

Guillermo Lopez

is a software engineer at CVC & Embodied AI Foundation.

-

Joel Moriana

is a software engineer at CVC & Embodied AI Foundation.

-

Jacopo Bartiromo

is a software engineer at CVC & Embodied AI Foundation.

-

Vladlen Koltun

is a Distinguished Scientist at Apple.

Advisors

-

Rowan McAllister rowan.mcallister@tri.global

is a research scientist and manager at Toyota Research Institute working on probabilistic models and motion planning for autonomous vehicles.

Program Committee

We thank everyone who helped make this workshop possible!

• Aayush Ahuja

• Maram Akila

• Elahe Arani

• Bahar Azari

• Junchi Bin

• Daniel Bogdoll

• Jaekwang Cha

• Changhao Chen

• Zheng Chen

• Wenhao Ding

• Christopher Diehl

• Nemanja Djuric

• Praneet Dutta

• Hesham Eraqi

• Meng Fan

• Shivam Gautam

• Paweł Gora

• Simon Gustavsson

• Michael Hanselmann

• Jeffrey Hawke

• Hengbo Ma

• Xiaobai Ma

• Marcin Możejko

• Amitangshu Mukherjee

• Sauradip Nag

• Maximilian Naumann

• Patrick Nguyen

• Yaru Niu

• Tanvir Parhar

• Nishant Rai

• Gaurav Raina

• Daniele Reda

• Kasra Rezaee

• Enna Sachdeva

• Mark Schutera

• Adam Scibior

• Daniel Sikar

• Apoorv Singh

• Sahib Singh

• Ruobing Shen

• Tianyu Shi

• Deepak Singh

• Abhishek Sinha

• Ibrahim Sobh

• Zhaoen Su

• Shreyas Subramanian

• Ho Suk

• Chen Tang

• Toan Tran

• Ankit Vora

• Ákos Utasi

• Letian Wang

• Chenfeng Xu

• Yan Xu

• Xinchen Yan

• Xi Yi

• Senthil Yogamani

• Jiakai Zhang

• Jiacheng Zhu

Sponsors

We thank Waymo for generously sponsoring three student travel awards and the virtual poster session.